With the fast growth of AI, we also observe new design patterns, heuristics, and anti-patterns that shape the AI experience.

Fortunately, instead of trying to become an overnight AI design expert by studying what others did, I got to work on an actual AI-related project.

Here’s what I learned from implementing natural-language AI search in the Archilogic product.

Background

Unlike the static polylines or image files our competitors offered, Archilogic’s graph-based data model allowed us to leverage AI for deeper insights into customers’ real estate portfolios.

We included ‘AI readiness’ as part of our value proposition. People wanted to use AI, and some of our customers expressed interest in the early prototypes.

Ever since I joined, I had complained that the existing filters in the floors list were too basic, so a feature that could address that made a lot of sense.

As a facility manager, I need to access information about floors more easily and quickly.

Using natural language to ask questions about floors resonated with the principle we had for the product: to make spatial information more accessible and human-friendly.

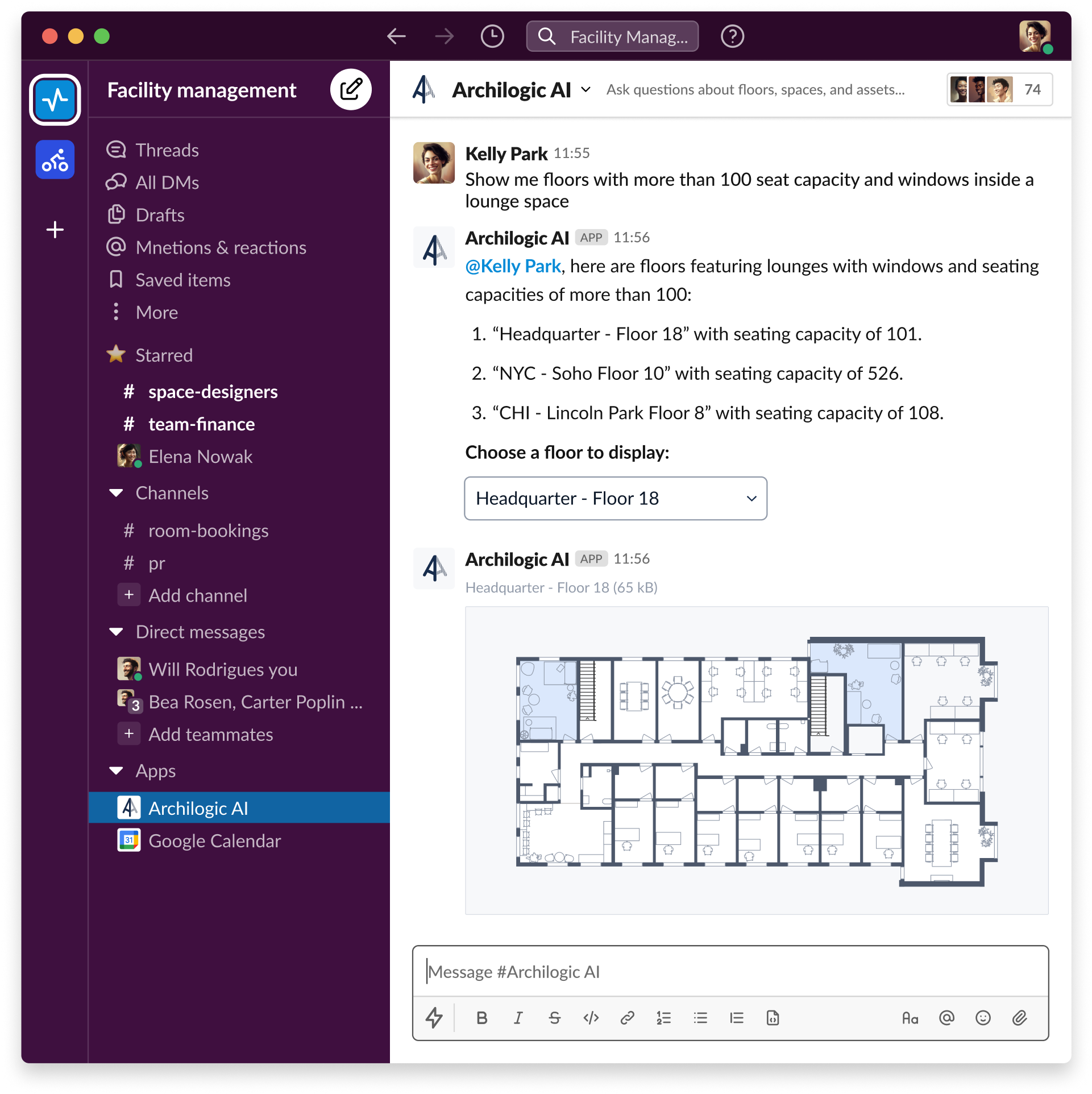

Our natural language search in its present form.

The work before the work

On some projects, the work done before the project officially starts equals or even exceeds the work done during it.

This was the case here. Before considering the introduction of AI-enabled search to our product, we experimented with various prototypes, showcasing how AI could be used with our data model.

Frederic, our VP of engineering, built a simple search prototype in Retool, and I designed a rough conversational prototype.

On the backend side, developers worked hard to deliver a GraphQL API for which LLMs could generate queries.

We began testing Anthropic’s Claude (the LLM used in Notion) and ChatGPT to see how conversations around the API might feel and whether they could be integrated into the product.

The results were really promising.

At the time, I wasn’t 100% sure whether defaulting to a conversational interface would work for the Dashboard (the app for managing floor plans).

I had already heard stories of companies whose AI chat assistants, bolted on completely separately from the existing product experience, backfired.

However, testing the conversational approach did help us build an Archilogic AI Slack app further down the road.

Seamlessly integrating the AI experience into the product became a priority for me.

It was supposed to improve the existing search experience rather than replace it entirely.[1]

But to do that, we had to decide which questions (queries) we wanted to support and, as a consequence, how and where to display the answers.

Questions to answer

We collected a huge list of questions our customers could ask. From my perspective, they all fell into three main categories:

- High-level prompts about the entire portfolio that return a number (or several numbers) as an answer.

- Prompts about floors, answered with a list of floors that meet certain criteria.

- Prompts about spaces and assets, answered by highlighting the relevant elements on the floor plan in the floor details view.

The last two categories naturally aligned with our Dashboard:

- The floors list view was where you managed floors, so that’s where you should ask for floors.

- The floor details view was where you inspected spaces and assets, making it feel right to ask for them there.

As for high-level prompts, I figured that some sort of global app search would have to be introduced.
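To make the mapping from prompt category to Dashboard view concrete, here is a minimal TypeScript sketch. The answer shapes (`PortfolioAnswer`, `FloorListAnswer`, `HighlightAnswer`), the view names and the `viewFor` function are hypothetical illustrations, not Archilogic code; they only encode the three categories and where each kind of answer would be shown.

```typescript
// Hypothetical answer shapes for the three prompt categories.
// None of these names come from Archilogic's codebase.
type PortfolioAnswer = { kind: "portfolio"; values: Record<string, number> };
type FloorListAnswer = { kind: "floors"; floorIds: string[] };
type HighlightAnswer = { kind: "highlight"; floorId: string; elementIds: string[] };

type Answer = PortfolioAnswer | FloorListAnswer | HighlightAnswer;

type View = "global search" | "floors list" | "floor details";

// Map each answer kind to the view where it would be displayed.
function viewFor(answer: Answer): View {
  switch (answer.kind) {
    case "portfolio":
      return "global search"; // one or more numbers about the whole portfolio
    case "floors":
      return "floors list"; // floors matching the criteria
    case "highlight":
      return "floor details"; // spaces and assets highlighted on the plan
  }
}
```

Modeling the answers as a discriminated union keeps the routing exhaustive: under `strict` mode, adding a fourth category without handling it in `viewFor` becomes a compile-time error.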

Since our GraphQL API already supported floors, we decided to start with them and then follow with spaces and assets.

Visual cues

With the scope narrowed down, I could start diving deeper into experience details.

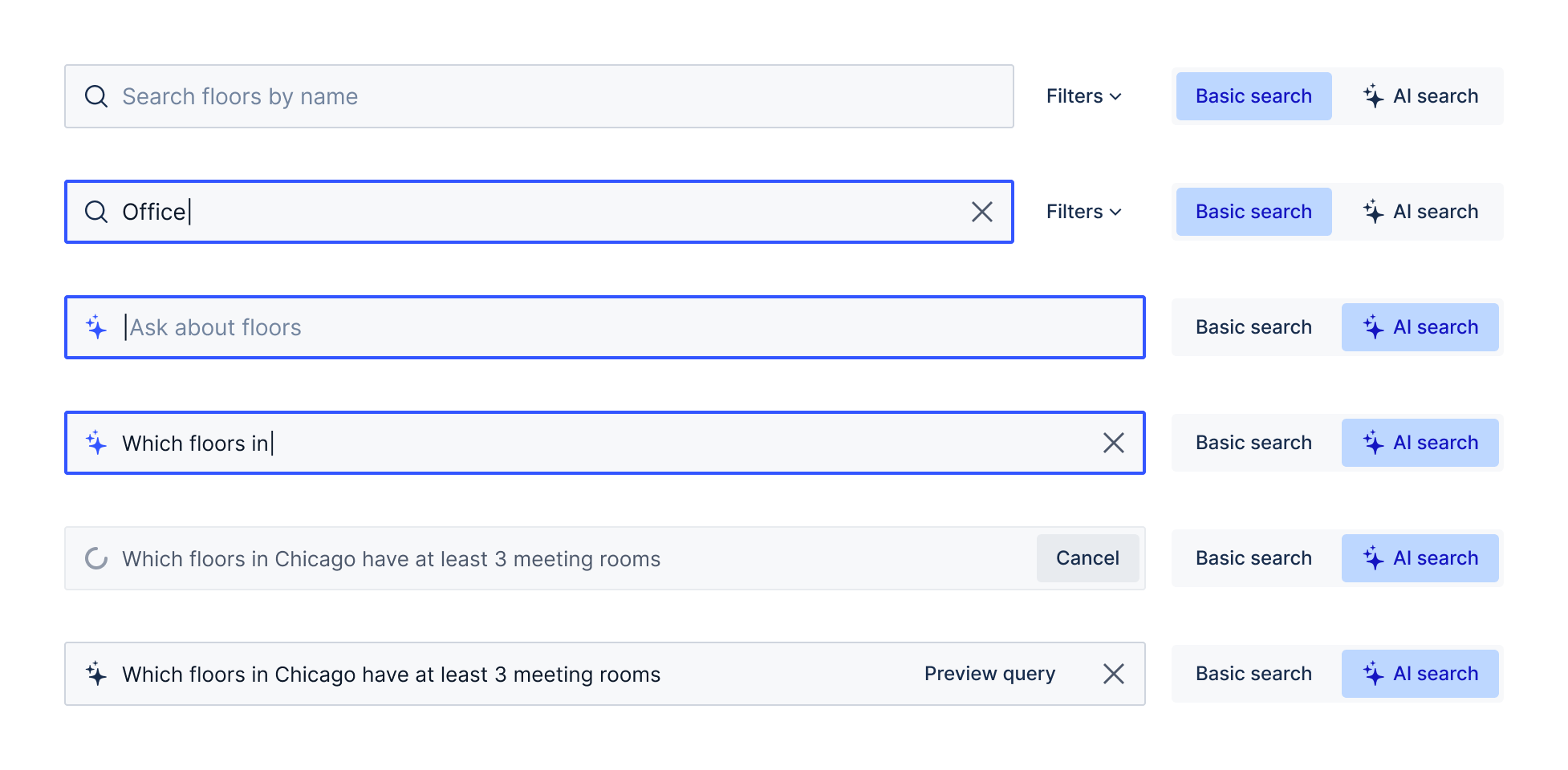

Experimenting with the input fields for the AI search.

First, I focused on helping users identify AI features. I used the now-ubiquitous sparkle icon as a visual indicator.

The icon was meant to appear on buttons as well as inside the input fields.

For the first version, I opted for clarity and precision, specifying the type of search (basic or AI) our users would use.

The blank canvas dilemma

As powerful as LLMs are, they suffer from the common ‘blank canvas’ problem: they can do so much that users often don’t know where to start.

I tried to mitigate that. For placeholders in the input fields, we introduced a pattern where ‘Ask about’ was followed by the intended scope of a particular AI search.

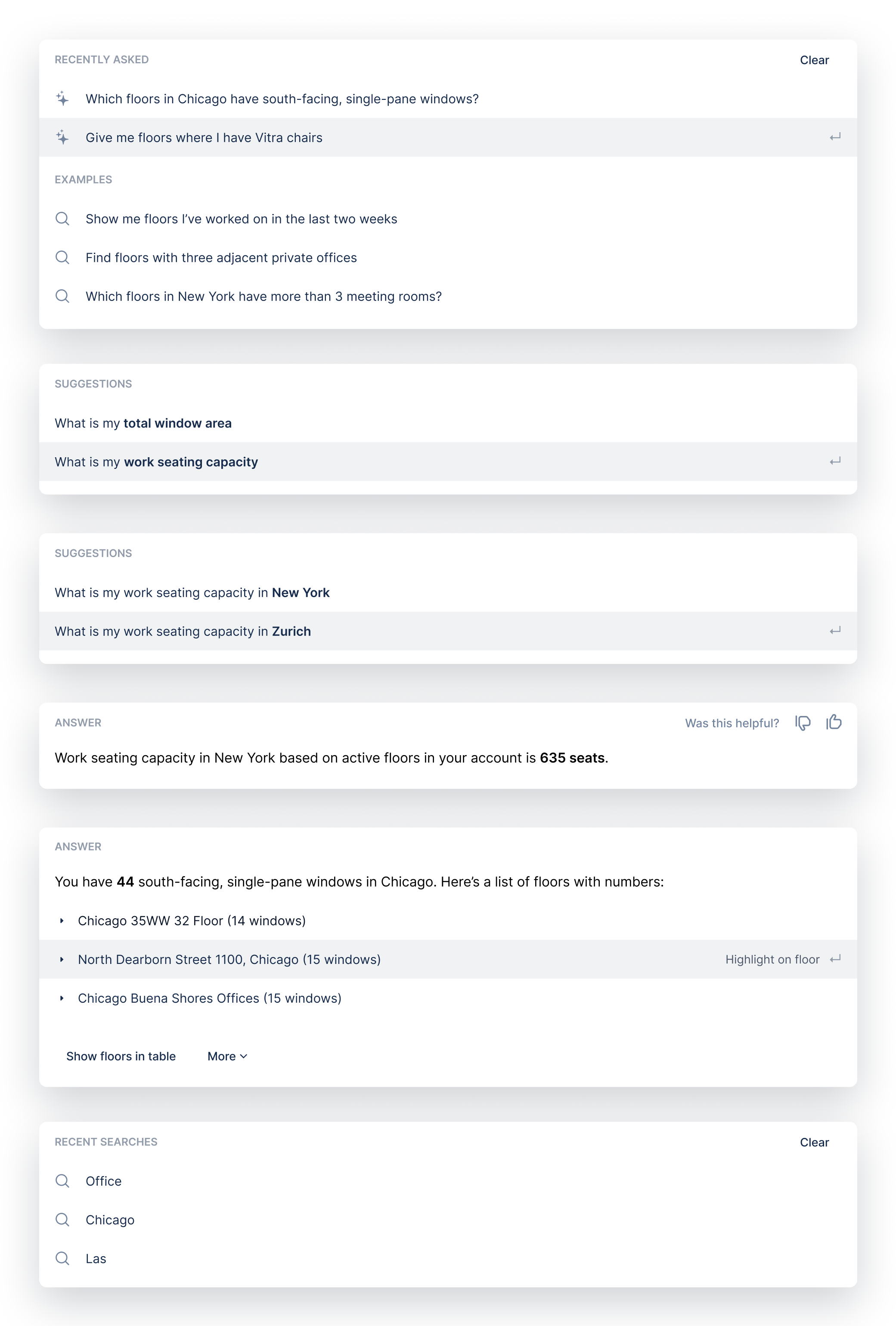

When users focused on the field in the floors list, the panel with recently asked questions (prompts) and examples was shown.

Experimenting with the panels for the AI search.
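Keeping the panel of recent prompts useful takes a little bookkeeping. Below is a minimal sketch, assuming the panel shows the newest prompts first, collapses repeated prompts case-insensitively, and keeps only a fixed number of entries; `addRecentPrompt` and its default limit of 5 are hypothetical choices, not details from the project.

```typescript
// Hypothetical helper for the "recently asked questions" panel.
// Assumptions: newest first, duplicates collapsed case-insensitively,
// and the list capped at `limit` entries.
function addRecentPrompt(recent: readonly string[], prompt: string, limit = 5): string[] {
  const trimmed = prompt.trim();
  if (trimmed === "") return [...recent]; // ignore empty submissions
  const key = trimmed.toLowerCase();
  // Drop any earlier copy of the same prompt, then put the new one on top.
  const rest = recent.filter((p) => p.toLowerCase() !== key);
  return [trimmed, ...rest].slice(0, limit);
}
```

Returning a new array instead of mutating the old one makes the helper easy to plug into immutable UI state.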

Initially, I wanted to show autocomplete suggestions while typing, but that would have taken too much technical effort.

Building the query and fetching results

One important thing I had to figure out was what should happen after users hit enter.

Under the hood, pressing Enter made the LLM generate a GraphQL query, which was then executed to return the results. For an account with 140 models, the LLM step took 3-5 seconds and the GraphQL query 1-2 seconds, so a search could take 4-7 seconds in total.

The loading states in the UI had to account for these potentially significant delays. I believed we should use separate loading indicators for the two steps.

We used a spinner in the input field for the LLM part (preparing query) and skeleton loaders for the GraphQL query part (fetching floors).

This also let us handle errors from each step separately and made the UI feel more responsive while users waited.
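The two-step flow can be sketched as follows, assuming the LLM call and the GraphQL call are passed in as async functions. `runAiSearch`, `generateQuery`, `executeQuery` and the phase names are hypothetical; the point is that each step reports its own loading state and its own failure, which is what allows a spinner for the first step and skeleton loaders for the second.

```typescript
// Hypothetical states emitted to the UI during an AI search.
type SearchState<T> =
  | { phase: "preparing-query" } // spinner in the input field
  | { phase: "fetching-results"; query: string } // skeleton loaders in the list
  | { phase: "done"; query: string; results: T[] }
  | { phase: "error"; failedStep: "llm" | "graphql"; message: string };

async function runAiSearch<T>(
  prompt: string,
  generateQuery: (prompt: string) => Promise<string>, // LLM step (assumed)
  executeQuery: (query: string) => Promise<T[]>, // GraphQL step (assumed)
  onState: (state: SearchState<T>) => void,
): Promise<void> {
  // Step 1: the LLM turns the prompt into a query.
  onState({ phase: "preparing-query" });
  const query = await generateQuery(prompt).catch((e: unknown) => {
    onState({ phase: "error", failedStep: "llm", message: String(e) });
    return null;
  });
  if (query === null) return;

  // Step 2: the generated query is executed against the API.
  onState({ phase: "fetching-results", query });
  const results = await executeQuery(query).catch((e: unknown) => {
    onState({ phase: "error", failedStep: "graphql", message: String(e) });
    return null;
  });
  if (results === null) return;

  onState({ phase: "done", query, results });
}
```

Because the failing step is part of the error state, the UI can tell users whether their question could not be understood or whether fetching the floors failed.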

Refining prompts

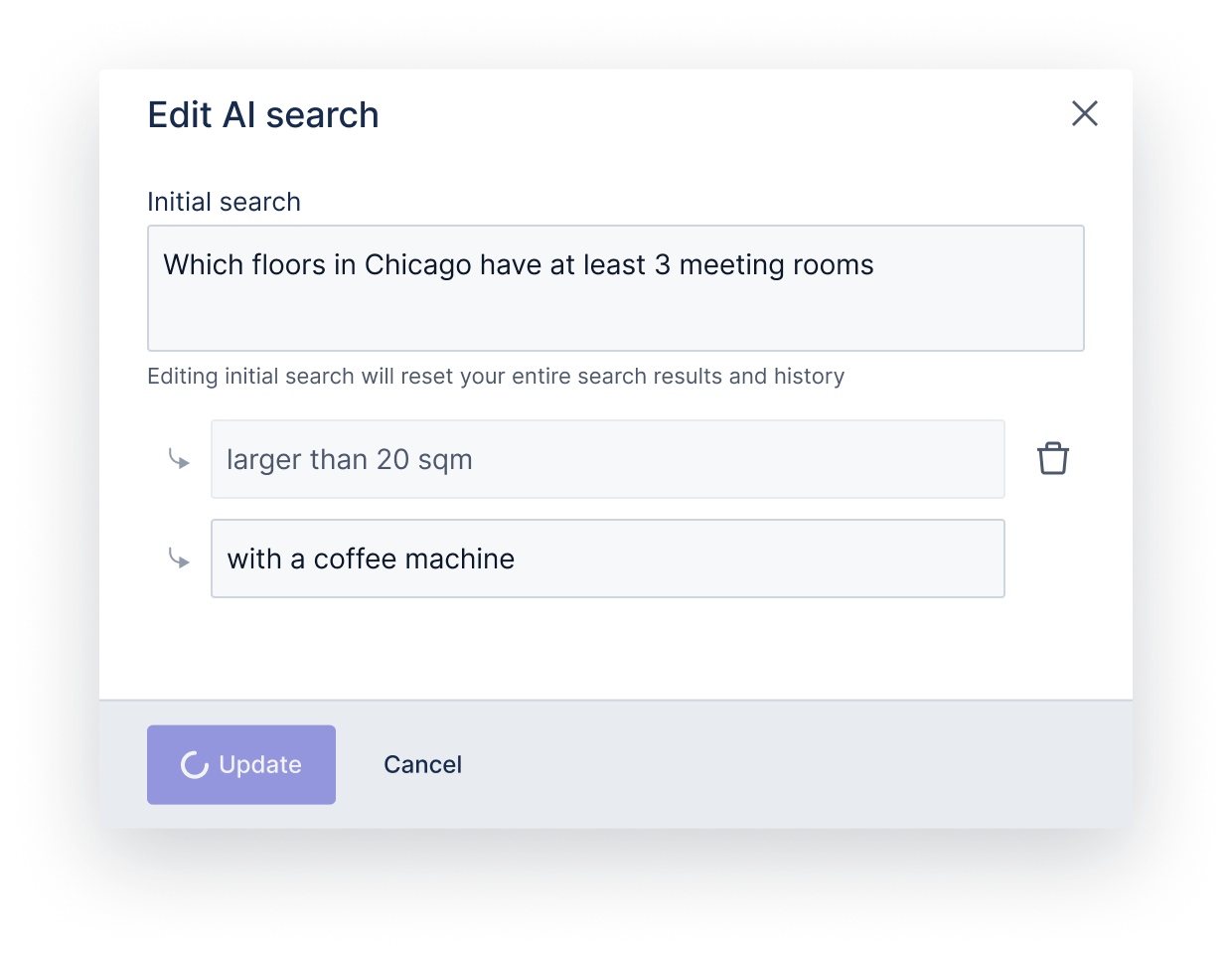

I believed that users needed tools to refine their prompts conveniently.

My gut feeling was that if a user asks, “Which floors in New York have more than 3 meeting rooms?” and then wants to add more depth by typing “larger than 30 sqm”, they should be able to do so without rewriting the original prompt. They could then click “Apply” to see the results and, if needed, remove the change with a single click.

I designed a tuning interface that allowed this without forcing users to manually edit prompts in the input field.

However, for the first version, we had to prioritize accuracy, so we decided to leave it out.
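The tuning interface that was left out could be modeled by keeping the original prompt and its refinements separate, so each refinement can be removed with one click. This is a minimal sketch under stated assumptions: `TunedPrompt`, `addRefinement`, `removeRefinement` and `composePrompt` are hypothetical, and merging refinements into the prompt as a comma-separated clause is just one possible choice.

```typescript
// Hypothetical model of the tuning interface: the base prompt stays untouched
// and refinements are stored as separate, removable items.
interface TunedPrompt {
  base: string;
  refinements: string[];
}

function addRefinement(p: TunedPrompt, refinement: string): TunedPrompt {
  return { ...p, refinements: [...p.refinements, refinement.trim()] };
}

function removeRefinement(p: TunedPrompt, index: number): TunedPrompt {
  return { ...p, refinements: p.refinements.filter((_, i) => i !== index) };
}

// Build the prompt text that would be sent when the user clicks "Apply".
// Assumption: refinements are appended as a comma-separated clause.
function composePrompt(p: TunedPrompt): string {
  const base = p.base.trim();
  if (p.refinements.length === 0) return base;
  const endsWithQuestion = base.endsWith("?");
  const stem = endsWithQuestion ? base.slice(0, -1) : base;
  return `${stem}, ${p.refinements.join(", ")}${endsWithQuestion ? "?" : ""}`;
}
```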

Conclusion

There’s nothing more frustrating than something that looks nice but doesn’t work. Training the LLM to be accurate is a time-consuming job, and there’s always that one question you haven’t thought about.

I added an option to preview the generated query so the team could verify the results and improve accuracy accordingly. All of this brings us closer to releasing this great feature.

From a designer’s point of view, blindly following AI patterns will get you nowhere. Each product is different and at its own stage of AI tooling. Keep exploring options, but implement what makes the most sense for your product in its current shape.

[1] It’s never a good idea to make your product too dependent on another company’s API. I was reminded of that later, when 550 of OpenAI’s 700 employees told the board to resign.